When people of my generation are confronted with a new piece of technology, we must inevitably turn to a younger person for help. What is described as ‘user friendly’ is usually so only to those who are already familiar with the ways of the machines. ‘Well, they have grown up with the new technology,’ we say, by way of excuse. That is true, but might it also be true that they have grown up not only with, but also in competition with, the new technology? Consider the experience of infants in recent decades. They have learned that their cries get their parents’ attention, but at the same time they have found themselves in competition with mobile phones whose ring tones are set to call attention to themselves even against considerable ambient noise, just like babies’ cries. And they have seen these gadgets lifted to mothers’ cheeks, and those mothers looking attentively (lovingly?) at screens, just as the infants desired to be regarded. Even in the most intimate moments of a mother’s quality time with her child, that third other is always present, and always likely to interrupt with its incessant demand for attention.

I am not trying to lay blame or to accuse mothers of harming their children (though the notorious line from Philip Larkin’s poem might be quoted here), but am simply asking what happens to people who learn how to be human, how to love and relate, in this context. What happens to children formed and raised in a milieu in which they must compete for attention, not with other children, but with mysterious talking and crying machines? What do they learn about priorities in relationships, and about securing their own identity, interests, and desires in this complex world? How is interpersonal communication fostered or frustrated when it is so structured by mediating technology? This is the kind of question that arises when we consider AI in the context of common goods.

The consequences of the lockdowns imposed in response to Covid-19, which denied pupils face-to-face encounters with their teachers and children with their peers, are becoming evident. Teachers now observe the effects of this interruption to the normal processes of socialisation: children lack the ordinary skills of social interaction that they would formerly have been expected to bring to school. But might our reliance on gadgets for communication and socialising also harm our culture, draining it in some way of the shared capabilities, skills, and knowledge that make a decent social existence possible? This question can be sharpened specifically in relation to Artificial Intelligence and its increasing use in various domains of social life. Is our common good at risk from AI and its applications? To address this question, we need to specify what our common goods involve, and how AI might jeopardise them.

Distinction of Common Goods

We can distinguish two senses of common good, the practical and the perfective. In the practical sense, wherever people cooperate, they have a good in common, a common good. That good in common might be a private good (school places for our children), a club good (networks for alumni of our school), a collective good (any school’s ambition for its students), or a public good (high levels of educational attainment conditioning political discourse and respect for the rule of law). Less obvious, but perhaps more important, is the perfective sense in which cooperation serves a common good.

Again taking schooling as an example, we can see how education, as an accomplishment of persons and communities, enables people to be more and to realise their human potential to a greater extent. What fulfils people is for their good, enabling them to flourish. Hence a perfective sense of the good is relevant, even if it is not at the forefront of our thinking when we collaborate on some project. At such times we focus on the task in hand, but our performance also shapes us and our relationships.

When considering the relationship between AI and common goods, it is understandable that people would spontaneously begin with common goods in the practical sense: the objectives they hope to achieve by relying on AI. There is the project of reducing drudgery and repetitiveness in work, so that machines can do what humans have had to do. There are projects of increasing effectiveness and efficiency, as more accurate analyses and diagnoses become possible by factoring out the fallibility of human processes. There are ambitions of increasing fairness, when vast quantities of paperwork, such as applications for jobs, mortgages, credit, or university places, can be processed without the risk of human tiredness, boredom, or prejudice distorting the process. The list goes on. Many worthwhile objectives can be pursued with AI bringing accuracy, reliability, efficiency, and fairness to the undertaking.

But what about the perfective common goods at stake? What impact is the use of AI having, or likely to have, on the development of human persons, and on the quality of relationships between persons whose interactions are mediated by the technology? What is it doing, or likely to do, to community, and to the quality of the cooperation itself, which can in turn be an instance of flourishing, a perfective realisation of human potential? In various areas where AI is currently being deployed, questions are being raised that touch on these perfective goods in common. They remain open questions, but they are sufficiently concerning that we cannot be indifferent to the possible answers.

One area of concern is signalled by large language models so sophisticated that they can produce very plausible and convincing text in several genres. ChatGPT, developed by OpenAI, fascinates with its ability to engage in conversation with the user, answering questions convincingly. This can be very useful, but what is its impact on our understanding of what is going on in a conversation, or in written communication? If I can no longer assume that another human being is collaborating with me in such an interaction, does that affect how I participate in communication when other persons are involved? If language is no longer exclusively a medium between people, do I then hear words differently when I’m aware they could be generated by a machine rather than spoken by a person? If the voice I hear on the phone might not be that of a person, does that reinforce a tendency to treat the speaker instrumentally, whether a machine or not?

The absence of persons from relevant decision-making aided by AI is another concern. The superiority of AI-aided medical diagnoses over those made by physicians, owing to their standardisation on the basis of large data inputs, is well established. But patients are concerned about the implications for their treatment when the decision is not made by another person, a physician with compassion as well as competence. Similarly, when decisions about granting credit, mortgages for house-buying, or jobs are made by machines drawing on analyses of large databases, people whose applications are rejected can be upset that decisions with life-changing consequences for them are taken by a machine rather than by another person. Even international human rights adjudication can now be facilitated by automated management of documentation. One might argue that the benefits of fairer and more reliable decisions outweigh the distress occasioned for some. But that is not the issue here. The issue is what we are doing to our common life, and to people’s willingness to collaborate, to comply, and to accept the burdens along with the benefits of social cooperation, when machines rather than human partners seem to be in control.

One form of this willingness to accept burdens in social life is Losers’ Consent: the willingness to accept unfavourable outcomes of democratic decision-making, a fundamental precondition for peaceful democracy. Is this too jeopardised by the undermining of social bonds that follows when human decision-makers are replaced with AI-powered machines? Formation for human relationships, and its reinforcement through social interaction, is a perfective common good that is also a public good. Human capacities for bonding are formed and strengthened through daily encounters. Now we must face the possibility that those capacities are not reinforced but jeopardised when our daily social encounters are increasingly with machines rather than with people. Have some of the infants who once competed with iPhones for a touch of their mother’s cheek become adults who prefer to relate online?


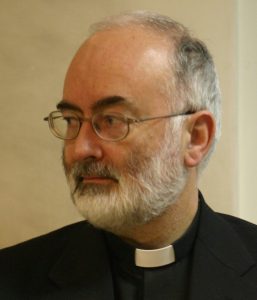

Dr Patrick Riordan, SJ, an Irish Jesuit, is Senior Fellow for Political Philosophy and Catholic Social Thought at Campion Hall, University of Oxford. Previously he taught political philosophy at Heythrop College, University of London. His 2017 book, Recovering Common Goods (Veritas, Dublin) was awarded the ‘Economy and Society’ prize by the Centesimus Annus Pro Pontifice Foundation in 2021. His most recent books are Human Dignity and Liberal Politics: Catholic Possibilities for the Common Good (Georgetown UP, 2023) and Connecting Ecologies: Integrating Responses to the Global Challenge (edited with Gavin Flood [Routledge, 2024]).